pbcore.io.dataset¶

The Python DataSet XML API is designed to be a lightweight interface for creating, opening, manipulating and writing DataSet XML files. It provides both a native Python API and console entry points for use in manual dataset curation or as a resource for P_Module developers.

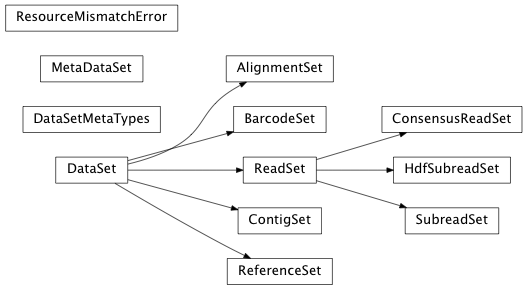

The API and console entry points are designed with the set operations one might perform on the various types of data held by a DataSet XML in mind: merge, split, write etc. While various types of DataSets can be found in XML files, the API (and in a way the console entry point, dataset.py) has DataSet as its base type, with various subtypes extending or replacing functionality as needed.

Console Entry Point Usage¶

The following entry points are available through the main script: dataset.py:

usage: dataset.py [-h] [-v] [--debug]

{create,filter,merge,split,validate,loadstats,consolidate}

...

Run dataset.py by specifying a command.

optional arguments:

-h, --help show this help message and exit

-v, --version show program's version number and exit

--debug Turn on debug level logging

DataSet sub-commands:

{create,filter,merge,split,validate,loadstats,consolidate}

Type {command} -h for a command's options

Create:

usage: dataset.py create [-h] [--type DSTYPE] [--novalidate] [--relative]

outfile infile [infile ...]

Create an XML file from a fofn or bam

positional arguments:

outfile The XML to create

infile The fofn or BAM file(s) to make into an XML

optional arguments:

-h, --help show this help message and exit

--type DSTYPE The type of XML to create

--novalidate Don't validate the resulting XML, don't touch paths

--relative Make the included paths relative instead of absolute (not

compatible with --novalidate)

Filter:

usage: dataset.py filter [-h] infile outfile filters [filters ...]

Add filters to an XML file

positional arguments:

infile The xml file to filter

outfile The resulting xml file

filters The values and thresholds to filter (e.g. rq>0.85)

optional arguments:

-h, --help show this help message and exit

Union:

usage: dataset.py union [-h] outfile infiles [infiles ...]

Combine XML (and BAM) files

positional arguments:

outfile The resulting XML file

infiles The XML files to merge

optional arguments:

-h, --help show this help message and exit

Validate:

usage: dataset.py validate [-h] infile

Validate ResourceId files (XML validation only available in testing)

positional arguments:

infile The XML file to validate

optional arguments:

-h, --help show this help message and exit

Load PipeStats:

usage: dataset.py loadstats [-h] [--outfile OUTFILE] infile statsfile

Load an sts.xml file into a DataSet XML file

positional arguments:

infile The XML file to modify

statsfile The .sts.xml file to load

optional arguments:

-h, --help show this help message and exit

--outfile OUTFILE The XML file to output

Split:

usage: dataset.py split [-h] [--contigs] [--chunks CHUNKS] [--subdatasets]

[--outdir OUTDIR]

infile ...

Split the dataset

positional arguments:

infile The xml file to split

outfiles The resulting xml files

optional arguments:

-h, --help show this help message and exit

--contigs Split on contigs

--chunks CHUNKS Split contigs into <chunks> total windows

--subdatasets Split on subdatasets

--outdir OUTDIR Specify an output directory

Consolidation requires in-depth filtering and BAM file manipulation. Implementation plans TBD.

Usage Examples¶

Resequencing Pipeline (CLI version)¶

In this scenario, we have two movies worth of subreads in two SubreadSets that we want to align to a reference, merge together, split into DataSet chunks by contig, then send through quiver on a chunkwise basis (in parallel).

Align each movie to the reference, producing a dataset with one bam file for each execution:

pbalign movie1.subreadset.xml referenceset.xml movie1.alignmentset.xml pbalign movie2.subreadset.xml referenceset.xml movie2.alignmentset.xml

Merge the files into a FOFN-like dataset (bams aren’t touched):

# dataset.py merge <out_fn> <in_fn> [<in_fn> <in_fn> ...] dataset.py merge merged.alignmentset.xml movie1.alignmentset.xml movie2.alignmentset.xml

Split the dataset into chunks by contig (rname) (bams aren’t touched). Note that supplying output files splits the dataset into that many output files (up to the number of contigs), with multiple contigs per file. Not supplying output files splits the dataset into one output file per contig, named automatically. Specifying a number of chunks instead will produce that many files, with contig or even sub contig (reference window) splitting.:

dataset.py split --contigs --chunks 8 merged.alignmentset.xml

Quiver then consumes these chunks:

variantCaller.py --alignmentSetRefWindows --referenceFileName referenceset.xml --outputFilename chunk1consensus.fasta --algorithm quiver chunk1contigs.alignmentset.xml variantCaller.py --alignmentSetRefWindows --referenceFileName referenceset.xml --outputFilename chunk2consensus.fasta --algorithm quiver chunk2contigs.alignmentset.xml

The chunking works by duplicating the original merged dataset (no bam duplication) and adding filters to each duplicate such that only reads belonging to the appropriate contigs are emitted. The contigs are distributed amongst the output files in such a way that the total number of records per chunk is about even.

Tangential Information¶

DataSet.refNames (which returns a list of reference names available in the dataset) is also subject to the filtering imposed during the split. Therefore you wont be running through superfluous (and apparently unsampled) contigs to get the reads in this chunk. The DataSet.records generator is also subject to filtering, but not as efficiently as readsInRange. If you do not have a reference window, readsInReference() is also an option.

As the bam files are never touched, each dataset contains all the information necessary to access all reads for all contigs. Doing so on these filtered datasets would require disabling the filters first:

dset.disableFilters()

Or removing the specific filter giving you problems:

dset.filters.removeRequirement('rname')

Resequencing Pipeline (API version)¶

In this scenario, we have two movies worth of subreads in two SubreadSets that we want to align to a reference, merge together, split into DataSet chunks by contig, then send through quiver on a chunkwise basis (in parallel). We want to do them using the API, rather than the CLI.

Align each movie to the reference, producing a dataset with one bam file for each execution

# CLI (or see pbalign API): pbalign movie1.subreadset.xml referenceset.xml movie1.alignmentset.xml pbalign movie2.subreadset.xml referenceset.xml movie2.alignmentset.xml

Merge the files into a FOFN-like dataset (bams aren’t touched)

# API, filename_list is dummy data: filename_list = ['movie1.alignmentset.xml', 'movie2.alignmentset.xml'] # open: dsets = [AlignmentSet(fn) for fn in filename_list] # merge with + operator: dset = reduce(lambda x, y: x + y, dsets) # OR: dset = AlignmentSet(*filename_list)

Split the dataset into chunks by contigs (or subcontig windows)

# split: dsets = dset.split(contigs=True, chunks=8)

Quiver then consumes these chunks

# write out if you need to (or pass directly to quiver API): outfilename_list = ['chunk1contigs.alignmentset.xml', 'chunk2contigs.alignmentset.xml'] # write with 'write' method: map(lambda (ds, nm): ds.write(nm), zip(dsets, outfilename_list)) # CLI (or see quiver API): variantCaller.py --alignmentSetRefWindows --referenceFileName referenceset.xml --outputFilename chunk1consensus.fasta --algorithm quiver chunk1contigs.alignmentset.xml variantCaller.py --alignmentSetRefWindows --referenceFileName referenceset.xml --outputFilename chunk2consensus.fasta --algorithm quiver chunk2contigs.alignmentset.xml # Inside quiver (still using python dataset API): aln = AlignmentSet(fname) # get this set's windows: refWindows = aln.refWindows # gather the reads for these windows using readsInRange, e.g.: reads = list(itertools.chain(aln.readsInRange(rId, start, end) for rId, start, end in refWindows))

API overview¶

The chunking works by duplicating the original merged dataset (no bam duplication) and adding filters to each duplicate such that only reads belonging to the appropriate contigs/windows are emitted. The contigs are distributed amongst the output files in such a way that the total number of records per chunk is about even.

DataSets can be created using the appropriate constructor (SubreadSet), or with the common constructor (DataSet) and later cast to a specific type (copy(asType=”SubreadSet”)). The DataSet constructor acts as a factory function (an artifact of early api Designs). The factory behavior is defined in the DataSet metaclass.

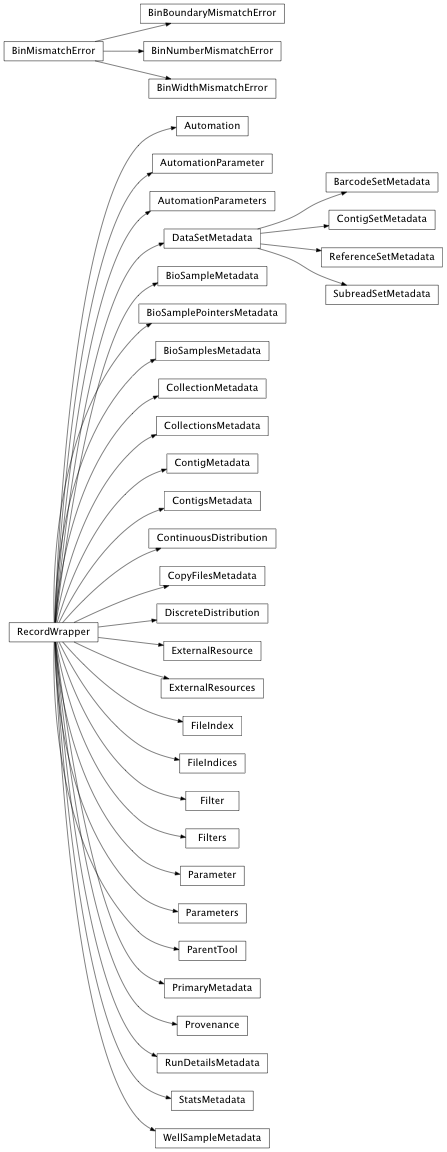

Classes representing the elements of the DataSet type

These classes are often instantiated by the parser and passed to the DataSet, where they are stored, manipulated, filtered, merged, etc.

-

class

pbcore.io.dataset.DataSetIO.AlignmentSet(*files)¶ Bases:

pbcore.io.dataset.DataSetIO.DataSetDataSet type specific to Alignments. No type specific Metadata exists, so the base class version is OK (this just ensures type representation on output and expandability

-

records¶ The records in this AlignmentSet, sorted by tStart.

-

-

class

pbcore.io.dataset.DataSetIO.BarcodeSet(*files)¶ Bases:

pbcore.io.dataset.DataSetIO.DataSetDataSet type specific to Barcodes

-

addMetadata(newMetadata, **kwargs)¶ Add metadata specific to this subtype, while leaning on the superclass method for generic metadata. Also enforce metadata type correctness.

-

-

class

pbcore.io.dataset.DataSetIO.ConsensusReadSet(*files)¶ Bases:

pbcore.io.dataset.DataSetIO.ReadSetDataSet type specific to CCSreads. No type specific Metadata exists, so the base class version is OK (this just ensures type representation on output and expandability

- Doctest:

>>> import pbcore.data.datasets as data >>> from pbcore.io import DataSet, ConsensusReadSet >>> ds1 = DataSet(data.getXml(2)) >>> ds1 <ConsensusReadSet... >>> ds1._metadata <SubreadSetMetadata... >>> ds2 = ConsensusReadSet(data.getXml(2)) >>> ds2 <ConsensusReadSet... >>> ds2._metadata <SubreadSetMetadata...

-

class

pbcore.io.dataset.DataSetIO.ContigSet(*files)¶ Bases:

pbcore.io.dataset.DataSetIO.DataSetDataSet type specific to Contigs

-

addMetadata(newMetadata, **kwargs)¶ Add metadata specific to this subtype, while leaning on the superclass method for generic metadata. Also enforce metadata type correctness.

-

-

class

pbcore.io.dataset.DataSetIO.DataSet(*files)¶ Bases:

objectThe record containing the DataSet information, with possible type specific subclasses

-

__add__(otherDataset)¶ Merge the representations of two DataSets without modifying the original datasets. (Fails if filters are incompatible).

- Args:

- otherDataset: a DataSet to merge with self

- Returns:

- A new DataSet with members containing the union of the input DataSets’ members and subdatasets representing the input DataSets

- Doctest:

>>> import pbcore.data.datasets as data >>> from pbcore.io import AlignmentSet >>> from pbcore.io.dataset.DataSetWriter import toXml >>> # xmls with different resourceIds: success >>> ds1 = AlignmentSet(data.getXml(no=8)) >>> ds2 = AlignmentSet(data.getXml(no=11)) >>> ds3 = ds1 + ds2 >>> expected = ds1.numExternalResources + ds2.numExternalResources >>> ds3.numExternalResources == expected True >>> # xmls with different resourceIds but conflicting filters: >>> # failure to merge >>> ds2.filters.addRequirement(rname=[('=', 'E.faecalis.1')]) >>> ds3 = ds1 + ds2 >>> ds3 >>> # xmls with same resourceIds: ignores new inputs >>> ds1 = AlignmentSet(data.getXml(no=8)) >>> ds2 = AlignmentSet(data.getXml(no=8)) >>> ds3 = ds1 + ds2 >>> expected = ds1.numExternalResources >>> ds3.numExternalResources == expected True

-

__deepcopy__(memo)¶ Deep copy this Dataset by recursively deep copying the members (objMetadata, DataSet metadata, externalResources, filters and subdatasets)

-

__eq__(other)¶ Test for DataSet equality. The method specified in the documentation calls for md5 hashing the “Core XML” elements and comparing. This is the same procedure for generating the Uuid, so the same method may be used. However, as simultaneously or regularly updating the Uuid is not specified, we opt to not set the newUuid when checking for equality.

- Args:

- other: The other DataSet to compare to this DataSet.

- Returns:

- T/F the Core XML elements of this and the other DataSet hash to the same value

-

__init__(*files)¶ DataSet constructor

Initialize representations of the ExternalResources, MetaData, Filters, and LabeledSubsets, parse inputs if possible

- Args:

- (HANDLED BY METACLASS __call__) *files: one or more filenames or

- uris to read

- Doctest:

>>> import os, tempfile >>> import pbcore.data.datasets as data >>> from pbcore.io import DataSet, SubreadSet >>> # Prog like pbalign provides a .bam file: >>> # e.g. d = DataSet("aligned.bam") >>> # Something like the test files we have: >>> inBam = data.getBam() >>> inBam.endswith('.bam') True >>> d = DataSet(inBam) >>> # A UniqueId is generated, despite being a BAM input >>> bool(d.uuid) True >>> dOldUuid = d.uuid >>> # They can write this BAM to an XML: >>> # e.g. d.write("alignmentset.xml") >>> outdir = tempfile.mkdtemp(suffix="dataset-doctest") >>> outXml = os.path.join(outdir, 'tempfile.xml') >>> d.write(outXml) >>> # And then recover the same XML: >>> d = DataSet(outXml) >>> # The UniqueId will be the same >>> d.uuid == dOldUuid True >>> # Inputs can be many and varied >>> ds1 = DataSet(data.getXml(8), data.getBam(1)) >>> ds1.numExternalResources 2 >>> ds1 = DataSet(data.getFofn()) >>> ds1.numExternalResources 2 >>> # DataSet types are autodetected: >>> DataSet(data.getSubreadSet()) <SubreadSet... >>> # But can also be used directly >>> SubreadSet(data.getSubreadSet()) <SubreadSet... >>> # Even with untyped inputs >>> DataSet(data.getBam()) <DataSet... >>> SubreadSet(data.getBam()) <SubreadSet... >>> # You can also cast up and down, but casting between siblings >>> # is limited (abuse at your own risk) >>> DataSet(data.getBam()).copy(asType='SubreadSet') ... <SubreadSet... >>> SubreadSet(data.getBam()).copy(asType='DataSet') ... <DataSet... >>> # DataSets can also be manipulated after opening: >>> # Add external Resources: >>> ds = DataSet() >>> _ = ds.externalResources.addResources(["IdontExist.bam"]) >>> ds.externalResources[-1].resourceId == "IdontExist.bam" True >>> # Add an index file >>> pbiName = "IdontExist.bam.pbi" >>> ds.externalResources[-1].addIndices([pbiName]) >>> ds.externalResources[-1].indices[0].resourceId == pbiName True

-

__metaclass__¶ alias of

MetaDataSet

-

__repr__()¶ Represent the dataset with an informative string:

- Returns:

- “<type uuid filenames>”

-

addDatasets(otherDataSet)¶ Add subsets to a DataSet object using other DataSets.

The following method of enabling merge-based split prevents nesting of datasets more than one deep. Nested relationships are flattened.

Note

Most often used by the __add__ method, rather than directly.

-

addExternalResources(newExtResources)¶ Add additional ExternalResource objects, ensuring no duplicate resourceIds. Most often used by the __add__ method, rather than directly.

- Args:

- newExtResources: A list of new ExternalResource objects, either

- created de novo from a raw bam input, parsed from an xml input, or already contained in a separate DataSet object and being merged.

- Doctest:

>>> from pbcore.io.dataset.DataSetMembers import ExternalResource >>> from pbcore.io import DataSet >>> ds = DataSet() >>> # it is possible to add ExtRes's as ExternalResource objects: >>> er1 = ExternalResource() >>> er1.resourceId = "test1.bam" >>> er2 = ExternalResource() >>> er2.resourceId = "test2.bam" >>> er3 = ExternalResource() >>> er3.resourceId = "test1.bam" >>> ds.addExternalResources([er1]) >>> len(ds.externalResources) 1 >>> # different resourceId: succeeds >>> ds.addExternalResources([er2]) >>> len(ds.externalResources) 2 >>> # same resourceId: fails >>> ds.addExternalResources([er3]) >>> len(ds.externalResources) 2 >>> # but it is probably better to add them a little deeper: >>> ds.externalResources.addResources( ... ["test3.bam"])[0].addIndices(["test3.bam.bai"])

-

addFilters(newFilters)¶ Add new or extend the current list of filters. Public because there is already a reasonably compelling reason (the console script entry point). Most often used by the __add__ method.

- Args:

- newFilters: a Filters object or properly formatted Filters record

- Doctest:

>>> import pbcore.data.datasets as data >>> from pbcore.io import DataSet >>> from pbcore.io.dataset.DataSetMembers import Filters >>> ds1 = DataSet() >>> filt = Filters() >>> filt.addRequirement(rq=[('>', '0.85')]) >>> ds1.addFilters(filt) >>> print ds1.filters ( rq > 0.85 ) >>> # Or load with a DataSet >>> ds2 = DataSet(data.getXml(16)) >>> print ds2.filters ... ( rname = E.faecalis...

-

addMetadata(newMetadata, **kwargs)¶ Add dataset metadata.

Currently we ignore duplicates while merging (though perhaps other transformations are more appropriate) and plan to remove/merge conflicting metadata with identical attribute names.

All metadata elements should be strings, deepcopy shouldn’t be necessary.

This method is most often used by the __add__ method, rather than directly.

- Args:

- newMetadata: a dictionary of object metadata from an XML file (or

- carefully crafted to resemble one), or a wrapper around said dictionary

- kwargs: new metadata fields to be piled into the current metadata

- (as an attribute)

- Doctest:

>>> import pbcore.data.datasets as data >>> from pbcore.io import DataSet >>> ds = DataSet() >>> # it is possible to add new metadata: >>> ds.addMetadata(None, Name='LongReadsRock') >>> print ds._metadata.getV(container='attrib', tag='Name') LongReadsRock >>> # but most will be loaded and modified: >>> ds2 = DataSet(data.getXml(no=8)) >>> ds2._metadata.totalLength 123588 >>> ds2._metadata.totalLength = 100000 >>> ds2._metadata.totalLength 100000 >>> ds2._metadata.totalLength += 100000 >>> ds2._metadata.totalLength 200000 >>> #TODO: renable when up to date sts.xml test files available >>> #ds3 = DataSet(data.getXml(no=8)) >>> #ds3.loadStats(data.getStats()) >>> #ds4 = DataSet(data.getXml(no=11)) >>> #ds4.loadStats(data.getStats()) >>> #ds5 = ds3 + ds4

-

close()¶ Close all of the opened resource readers

-

copy(asType=None)¶ Deep copy the representation of this DataSet

- Args:

- asType: The type of DataSet to return, e.g. ‘AlignmentSet’

- Returns:

- A DataSet object that is identical but for UniqueId

- Doctest:

>>> import pbcore.data.datasets as data >>> from pbcore.io import DataSet, SubreadSet >>> ds1 = DataSet(data.getXml()) >>> # Deep copying datasets is easy: >>> ds2 = ds1.copy() >>> # But the resulting uuid's should be different. >>> ds1 == ds2 False >>> ds1.uuid == ds2.uuid False >>> ds1 is ds2 False >>> # Most members are identical >>> ds1.name == ds2.name True >>> ds1.externalResources == ds2.externalResources True >>> ds1.filters == ds2.filters True >>> ds1.subdatasets == ds2.subdatasets True >>> len(ds1.subdatasets) == 2 True >>> len(ds2.subdatasets) == 2 True >>> # Except for the one that stores the uuid: >>> ds1.objMetadata == ds2.objMetadata False >>> # And of course identical != the same object: >>> assert not reduce(lambda x, y: x or y, ... [ds1d is ds2d for ds1d in ... ds1.subdatasets for ds2d in ... ds2.subdatasets]) >>> # But types are maintained: >>> ds1 = DataSet(data.getXml(no=10)) >>> ds1.metadata <SubreadSetMetadata... >>> ds2 = ds1.copy() >>> ds2.metadata <SubreadSetMetadata... >>> # Lets try casting >>> ds1 = DataSet(data.getBam()) >>> ds1 <DataSet... >>> ds1 = ds1.copy(asType='SubreadSet') >>> ds1 <SubreadSet... >>> # Lets do some illicit casting >>> ds1 = ds1.copy(asType='ReferenceSet') Traceback (most recent call last): TypeError: Cannot cast from SubreadSet to ReferenceSet >>> # Lets try not having to cast >>> ds1 = SubreadSet(data.getBam()) >>> ds1 <SubreadSet...

-

countRecords(rname=None, window=None)¶ Count the number of records mapped to ‘rname’ that overlap with ‘window’

-

disableFilters()¶ Disable read filtering for this object

-

enableFilters()¶ Re-enable read filtering for this object

-

filters¶ Limit setting to ensure cache hygiene and filter compatibility

-

fullRefNames¶ A list of reference full names (full header).

-

hasPbi¶ Test whether all resources are opened as IndexedBamReader objects

-

indexRecords¶ Return a recarray summarizing all of the records in all of the resources that conform to those filters addressing parameters cached in the pbi.

-

isCmpH5¶ Test whether all resources are cmp.h5 files

-

loadStats(filename)¶ Load pipeline statistics from a <moviename>.sts.xml file. The subset of these data that are defined in the DataSet XSD become available through via DataSet.metadata.summaryStats.<...> and will be written out to the DataSet XML format according to the DataSet XML XSD.

- Args:

- filename: the filename of a <moviename>.sts.xml file

- Doctest:

>>> import pbcore.data.datasets as data >>> from pbcore.io import AlignmentSet >>> ds1 = AlignmentSet(data.getXml(8)) >>> # TODO: renable when up to date sts.xml test files available >>> #ds1.loadStats(data.getStats()) >>> #ds2 = AlignmentSet(data.getXml(11)) >>> #ds2.loadStats(data.getStats()) >>> #ds3 = ds1 + ds2 >>> #ds1.metadata.summaryStats.prodDist.bins >>> #[1576, 901, 399, 0] >>> #ds2.metadata.summaryStats.prodDist.bins >>> #[1576, 901, 399, 0] >>> #ds3.metadata.summaryStats.prodDist.bins >>> #[3152, 1802, 798, 0]

-

makePathsAbsolute(curStart='.')¶ As part of the validation process, make all ResourceIds absolute URIs rather than relative paths. Generally not called by API users.

- Args:

- curStart: The location from which relative paths should emanate.

-

makePathsRelative(outDir=False)¶ Make things easier for writing test cases: make all ResourceIds relative paths rather than absolute paths. A less common use case for API consumers.

- Args:

- outDir: The location from which relative paths should originate

-

metadata¶ Return the DataSet metadata as a DataSetMetadata object. Attributes should be populated intuitively, but see DataSetMetadata documentation for more detail.

-

name¶ The name of this DataSet

-

newUuid(setter=True)¶ Generate and enforce the uniqueness of an ID for a new DataSet. While user setable fields are stripped out of the Core DataSet object used for comparison, the previous UniqueId is not. That means that copies will still be unique, despite having the same contents.

- Args:

- setter=True: Setting to False allows MD5 hashes to be generated

- (e.g. for comparison with other objects) without modifying the object’s UniqueId

- Returns:

- The new Id, a properly formatted md5 hash of the Core DataSet

- Doctest:

>>> from pbcore.io import AlignmentSet >>> ds = AlignmentSet() >>> old = ds.uuid >>> _ = ds.newUuid() >>> old != ds.uuid True

-

numExternalResources¶ The number of ExternalResources in this DataSet

-

numRecords¶ The number of records in this DataSet (from the metadata)

-

processFilters()¶ Generate a list of functions to apply to a read, all of which return T/F. Each function is an OR filter, so any() true passes the read. These functions are the AND filters, and will likely check all() of other functions. These filtration functions are cached so that they are not regenerated from the base filters for every read

-

reFilter()¶ The filters on this dataset have changed, update DataSet state as needed

-

readGroupTable¶ Combine the readGroupTables of each external resource

-

readsInRange(dset, *args, **kwargs)¶ A generator of (usually) BamAlignment objects for the reads in one or more Bam files pointed to by the ExternalResources in this DataSet that have at least one coordinate within the specified range in the reference genome.

Rather than developing some convoluted approach for dealing with auto-inferring the desired references, this method and self.refNames should allow users to compose the desired query.

- Args:

refName: the name of the reference that we are sampling start: the start of the range (inclusive, index relative to

reference)end: the end of the range (inclusive, index relative to reference) justIndices: Not yet implemented

- Yields:

- BamAlignment objects

- Doctest:

>>> import pbcore.data.datasets as data >>> from pbcore.io import DataSet >>> ds = DataSet(data.getBam()) >>> for read in ds.readsInRange(ds.refNames[15], 100, 150): ... print 'hn: %i' % read.holeNumber hn: ...

-

readsInReference(dset, *args, **kwargs)¶ A generator of (usually) BamAlignment objects for the reads in one or more Bam files pointed to by the ExternalResources in this DataSet that are mapped to the specified reference genome.

- Args:

- refName: the name of the reference that we are sampling.

- Yields:

- BamAlignment objects

- Doctest:

>>> import pbcore.data.datasets as data >>> from pbcore.io import DataSet >>> ds = DataSet(data.getBam()) >>> for read in ds.readsInReference(ds.refNames[15]): ... print 'hn: %i' % read.holeNumber hn: ...

-

readsInSubDatasets(dset, *args, **kwargs)¶ To be used in conjunction with self.subSetNames

-

records¶ A generator of (usually) BamAlignment objects for the records in one or more Bam files pointed to by the ExternalResources in this DataSet.

- Yields:

- A BamAlignment object

- Doctest:

>>> import pbcore.data.datasets as data >>> from pbcore.io import DataSet >>> ds = DataSet(data.getBam()) >>> for record in ds.records: ... print 'hn: %i' % record.holeNumber hn: ...

-

refInfo(key)¶ The reference names present in the referenceInfoTable of the ExtResources.

- Args:

- key: a key for the referenceInfoTable of each resource

- Returns:

- A dictionary of refrence name: key_result pairs

-

refLength(rname)¶ The length of reference ‘rname’. This is expensive, so if you’re going to do many lookups cache self.refLengths locally and use that.

-

refLengths¶ A dict of refName: refLength

-

refNames¶ A list of reference names (id).

-

refWindows¶ Going to be tricky unless the filters are really focused on windowing the reference. Much nesting or duplication and the correct results are really not guaranteed

-

referenceInfo(refName)¶ Select a row from the DataSet.referenceInfoTable using the reference name as a unique key

-

referenceInfoTable¶ The merged reference info tables from the external resources. Record.ID is remapped to a unique integer key (though using record.Name is preferred). Record.Names are remapped for cmp.h5 files to be consistent with bam files.

-

resourceReaders(refName=False)¶ A generator of Indexed*Reader objects for the ExternalResources in this DataSet.

- Args:

- refName: Only yield open resources if they have refName in their

- referenceInfoTable

- Yields:

- An open indexed alignment file

- Doctest:

>>> import pbcore.data.datasets as data >>> from pbcore.io import DataSet >>> ds = DataSet(data.getBam()) >>> for seqFile in ds.resourceReaders(): ... for record in seqFile: ... print 'hn: %i' % record.holeNumber hn: ...

-

split(chunks=0, ignoreSubDatasets=False, contigs=False)¶ Deep copy the DataSet into a number of new DataSets containing roughly equal chunks of the ExternalResources or subdatasets.

- Args:

- chunks: the number of chunks to split the DataSet. When chunks=0,

- create one DataSet per subdataset, or failing that ExternalResource

- ignoreSubDatasets: F/T (False) do not split on datasets, only split

- on ExternalResources

- contigs: (False) split on contigs instead of external resources or

- subdatasets

- Returns:

- A list of new DataSet objects (all other information deep copied).

- Doctest:

>>> import pbcore.data.datasets as data >>> from pbcore.io import AlignmentSet >>> # splitting is pretty intuitive: >>> ds1 = AlignmentSet(data.getXml()) >>> # but divides up extRes's, so have more than one: >>> ds1.numExternalResources > 1 True >>> # the default is one AlignmentSet per ExtRes: >>> dss = ds1.split() >>> len(dss) == ds1.numExternalResources True >>> # but you can specify a number of AlignmentSets to produce: >>> dss = ds1.split(chunks=1) >>> len(dss) == 1 True >>> dss = ds1.split(chunks=2, ignoreSubDatasets=True) >>> len(dss) == 2 True >>> # The resulting objects are similar: >>> dss[0].uuid == dss[1].uuid False >>> dss[0].name == dss[1].name True >>> # Previously merged datasets are 'unmerged' upon split, unless >>> # otherwise specified. >>> # Lets try merging and splitting on subdatasets: >>> ds1 = AlignmentSet(data.getXml(8)) >>> ds1.totalLength 123588 >>> ds1tl = ds1.totalLength >>> ds2 = AlignmentSet(data.getXml(11)) >>> ds2.totalLength 117086 >>> ds2tl = ds2.totalLength >>> # merge: >>> dss = ds1 + ds2 >>> dss.totalLength == (ds1tl + ds2tl) True >>> # unmerge: >>> ds1, ds2 = sorted( ... dss.split(2), key=lambda x: x.totalLength, reverse=True) >>> ds1.totalLength == ds1tl True >>> ds2.totalLength == ds2tl True

-

subSetNames¶ The subdataset names present in this DataSet

-

toExternalFiles()¶ Returns a list of top level external resources (no indices).

-

toFofn(outfn=None, uri=False, relative=False)¶ Return a list of resource filenames (and write to optional outfile)

- Args:

- outfn: (None) the file to which the resouce filenames are to be

- written. If None, the only emission is a returned list of file names.

uri: T/F (False) write the resource filenames as URIs. relative: (False) emit paths relative to outfofn or ‘.’ if no

outfofn- Returns:

- A list of filenames or uris

- Writes:

- (Optional) A file containing a list of filenames or uris

- Doctest:

>>> from pbcore.io import DataSet >>> DataSet("bam1.bam", "bam2.bam").toFofn(uri=False) ['bam1.bam', 'bam2.bam']

-

totalLength¶ The total length of this DataSet

-

uuid¶ The UniqueId of this DataSet

-

write(outFile, validate=True, relPaths=False)¶ Write to disk as an XML file

- Args:

outFile: The filename of the xml file to be created validate: T/F (True) validate the ExternalResource ResourceIds relPaths: T/F (False) make the ExternalResource ResourceIds

relative instead of absolute filenames- Doctest:

>>> import pbcore.data.datasets as data >>> from pbcore.io import DataSet >>> import tempfile, os >>> outdir = tempfile.mkdtemp(suffix="dataset-doctest") >>> outfile = os.path.join(outdir, 'tempfile.xml') >>> ds1 = DataSet(data.getXml()) >>> ds1.write(outfile, validate=False) >>> ds2 = DataSet(outfile) >>> ds1 == ds2 True

-

-

class

pbcore.io.dataset.DataSetIO.DataSetMetaTypes¶ Bases:

objectThis mirrors the PacBioSecondaryDataModel.xsd definitions and be used to reference a specific dataset type.

-

class

pbcore.io.dataset.DataSetIO.MetaDataSet¶ Bases:

typeThis metaclass acts as a factory for DataSet and subtypes, intercepting constructor calls and returning a DataSet or subclass instance as appropriate (inferred from file contents).

-

__call__(*files)¶ Factory function for DataSet and subtypes

- Args:

- files: one or more files to parse

- Returns:

- A dataset (or subtype) object.

-

-

class

pbcore.io.dataset.DataSetIO.ReadSet(*files)¶ Bases:

pbcore.io.dataset.DataSetIO.DataSetBase type for read sets, should probably never be used as a concrete class

-

addMetadata(newMetadata, **kwargs)¶ Add metadata specific to this subtype, while leaning on the superclass method for generic metadata. Also enforce metadata type correctness.

-

-

class

pbcore.io.dataset.DataSetIO.ReferenceSet(*files)¶ Bases:

pbcore.io.dataset.DataSetIO.DataSetDataSet type specific to References

-

addMetadata(newMetadata, **kwargs)¶ Add metadata specific to this subtype, while leaning on the superclass method for generic metadata. Also enforce metadata type correctness.

-

contigs¶ A generator of contigs from the fastaReader objects for the ExternalResources in this ReferenceSet.

- Yields:

- A fasta file entry

- Doctest:

>>> # Either way: >>> import pbcore.data.datasets as data >>> from pbcore.io import DataSet >>> ds = DataSet(data.getBam()) >>> for seqFile in ds.resourceReaders(): ... for row in seqFile: ... print 'hn: %i' % row.holeNumber hn: ...

-

get_contig(contig_id)¶ Get a contig by ID

-

refNames¶ The reference names assigned to the External Resources, or contigs, if no name assigned.

-

resourceReaders(refName=None)¶ A generator of fastaReader objects for the ExternalResources in this ReferenceSet.

- Yields:

- An open fasta file

- Doctest:

>>> # Either way: >>> import pbcore.data.datasets as data >>> from pbcore.io import DataSet >>> ds = DataSet(data.getBam()) >>> for seqFile in ds.resourceReaders(): ... for row in seqFile: ... print 'hn: %i' % row.holeNumber hn: ...

-

-

class

pbcore.io.dataset.DataSetIO.SubreadSet(*files)¶ Bases:

pbcore.io.dataset.DataSetIO.ReadSetDataSet type specific to Subreads

DocTest:

>>> from pbcore.io import DataSet, SubreadSet >>> from pbcore.io.dataset.DataSetMembers import ExternalResources >>> import pbcore.data.datasets as data >>> ds1 = DataSet(data.getXml(no=5)) >>> ds2 = DataSet(data.getXml(no=5)) >>> # So they don't conflict: >>> ds2.externalResources = ExternalResources() >>> ds1 <SubreadSet... >>> ds1._metadata <SubreadSetMetadata... >>> ds1._metadata <SubreadSetMetadata... >>> ds1.metadata <SubreadSetMetadata... >>> len(ds1.metadata.collections) 1 >>> len(ds2.metadata.collections) 1 >>> ds3 = ds1 + ds2 >>> len(ds3.metadata.collections) 2 >>> ds4 = SubreadSet(data.getSubreadSet()) >>> ds4 <SubreadSet... >>> ds4._metadata <SubreadSetMetadata... >>> len(ds4.metadata.collections) 1

-

pbcore.io.dataset.DataSetIO.filtered(generator)¶ Wrap a generator with postfiltering

-

pbcore.io.dataset.DataSetIO.openDataSet(*files)¶ Generic factory function to open DataSets (or attempt to open a subtype if one can be inferred from the file(s).

A replacement for the generic DataSet(*files) constructor

The operations possible between DataSets of the same and different types are limited, see the DataSet XML documentation for details.

DataSet XML files have a few major components: XML metadata, ExternalReferences, Filters, DataSet Metadata, etc. These are represented in different ways internally, depending on their complexity. DataSet metadata especially contains a large number of different potential elements, many of which are accessible in the API as nested attributes. To preserve the API’s ability to grant access to any DataSet Metadata available now and in the future, as well as to maintain the performance of dataset reading and writing, each DataSet stores its metadata in what approximates a tree structure, with various helper classes and functions manipulating this tree. The structure of this tree and currently implemented helper classes are available in the DataSetMembers module.

DataSetMetadata (also the tag of the Element in the DataSet XML representation) is somewhat challening to store, access, and (de)serialize efficiently. Here, we maintain a bulk representation of all of the dataset metadata (or any other XML data, like ExternalResources) found in the XML file in the following data structure:

- An Element is a turned into a dictionary:

- XmlElement => {‘tag’: ‘ElementTag’,

‘text’: ‘ElementText’, ‘attrib’: {‘ElementAttributeName’: ‘AttributeValue’,

‘AnotherAttrName’: ‘AnotherAttrValue’},

- ‘children’: [XmlElementDict,

- XmlElementDictWithSameOrDifferentTag]}

Child elements are represented similarly and stored (recursively) as a list in ‘children’. The top level we store for DataSetMetadata is just a list, which can be thought of as the list of children of a different element (say, a DataSet or SubreadSet element, if we stored that):

DataSetMetadata = [XmlTag, XmlTagWithSameOrDifferentTag]

- We keep this for two reasons:

- We don’t want to have to write a lot of logic to go from XML to an internal representation and then back to XML.

- We want to be able to store and at least write metadata that doesn’t yet exist, even if we can’t merge it intelligently.

- Keeping and manipulating a dictionary is ~10x faster than an OrderedAttrDict, and probably faster to use than a full stack of objects.

Instead, we keep and modify this list:dictionary structure, wrapping it in classes as necessary. The classes and methods that wrap this datastructure serve two pruposes:

- Provide an interface for our code (and making merging clean) e.g.:

- DataSet(“test.xml”).metadata.numRecords += 1

- Provide an interface for users of the DataSet API, e.g.:

numRecords = DataSet(“test.xml”).metadata.numRecords

- bioSamplePointer = (DataSet(“test.xml”)

.metadata.collections[0] .wellSample.bioSamplePointers[0])

Though users can still access novel metadata types the hard way e.g.:

- bioSamplePointer = (DataSet(“test.xml”)

.metadata.collections[0] [‘WellSample’][‘BioSamplePointers’] [‘BioSamplePointer’].record[‘text’])

note: Assuming __getitem__ is implemented for the ‘children’ list

-

class

pbcore.io.dataset.DataSetMembers.Automation(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

automationParameters¶

-

-

class

pbcore.io.dataset.DataSetMembers.AutomationParameter(record=None)¶

-

class

pbcore.io.dataset.DataSetMembers.AutomationParameters(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

addParameter(key, value)¶

-

automationParameter¶

-

-

class

pbcore.io.dataset.DataSetMembers.BarcodeSetMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.DataSetMetadataThe DataSetMetadata subtype specific to BarcodeSets.

-

barcodeConstruction¶

-

-

exception

pbcore.io.dataset.DataSetMembers.BinBoundaryMismatchError(min1, min2)¶

-

exception

pbcore.io.dataset.DataSetMembers.BinMismatchError¶ Bases:

exceptions.Exception

-

exception

pbcore.io.dataset.DataSetMembers.BinNumberMismatchError(num1, num2)¶

-

exception

pbcore.io.dataset.DataSetMembers.BinWidthMismatchError(width1, width2)¶

-

class

pbcore.io.dataset.DataSetMembers.BioSampleMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapperThe metadata for a single BioSample

-

createdAt¶

-

uniqueId¶

-

-

class

pbcore.io.dataset.DataSetMembers.BioSamplePointersMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapperThe BioSamplePointer members don’t seem complex enough to justify class representation, instead rely on base class methods to provide iterators and accessors

-

class

pbcore.io.dataset.DataSetMembers.BioSamplesMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapperThe metadata for the list of BioSamples

- Doctest:

>>> from pbcore.io import DataSet >>> import pbcore.data.datasets as data >>> ds = DataSet(data.getSubreadSet()) >>> ds.metadata.bioSamples[0].name 'consectetur purus' >>> for bs in ds.metadata.bioSamples: ... print bs.name consectetur purus >>> em = {'tag':'BioSample', 'text':'', 'children':[], ... 'attrib':{'Name':'great biosample'}} >>> ds.metadata.bioSamples.extend([em]) >>> ds.metadata.bioSamples[1].name 'great biosample'

-

__getitem__(index)¶ Get a biosample

-

__iter__()¶ Iterate over biosamples

-

merge(other)¶

-

class

pbcore.io.dataset.DataSetMembers.CollectionMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapperThe metadata for a single collection. It contains Context, InstrumentName etc. as attribs, InstCtrlVer etc. for children

-

automation¶

-

cellIndex¶

-

cellPac¶

-

collectionNumber¶

-

context¶

-

instCtrlVer¶

-

instrumentId¶

-

instrumentName¶

-

primary¶

-

runDetails¶

-

sigProcVer¶

-

wellSample¶

-

-

class

pbcore.io.dataset.DataSetMembers.CollectionsMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapperThe Element should just have children: a list of CollectionMetadataTags

-

__getitem__(index)¶

-

__iter__()¶

-

merge(other)¶

-

-

class

pbcore.io.dataset.DataSetMembers.ContigMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

digest¶

-

length¶

-

-

class

pbcore.io.dataset.DataSetMembers.ContigSetMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.DataSetMetadataThe DataSetMetadata subtype specific to ContigSets.

-

contigs¶

-

merge(other)¶

-

-

class

pbcore.io.dataset.DataSetMembers.ContigsMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

__getitem__(index)¶

-

__iter__()¶

-

merge(other)¶

-

-

class

pbcore.io.dataset.DataSetMembers.ContinuousDistribution(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

binWidth¶

-

bins¶

-

description¶

-

labels¶ Label the bins with the min value of each bin

-

maxBinValue¶

-

maxOutlierValue¶

-

merge(other)¶

-

minBinValue¶

-

minOutlierValue¶

-

numBins¶

-

sample95thPct¶

-

sampleMean¶

-

sampleMed¶

-

sampleSize¶

-

sampleStd¶

-

-

class

pbcore.io.dataset.DataSetMembers.CopyFilesMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapperThe CopyFile members don’t seem complex enough to justify class representation, instead rely on base class methods

-

class

pbcore.io.dataset.DataSetMembers.DataSetMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapperThe root of the DataSetMetadata element tree, used as base for subtype specific DataSet or for generic “DataSet” records.

-

merge(other)¶

-

numRecords¶ Return the number of records in a DataSet using helper functions defined in the base class

-

provenance¶

-

summaryStats¶

-

totalLength¶ Return the TotalLength property of this dataset. TODO: update the value from the actual external reference on ValueError

-

-

class

pbcore.io.dataset.DataSetMembers.DiscreteDistribution(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

bins¶

-

description¶

-

labels¶

-

merge(other)¶

-

numBins¶

-

-

class

pbcore.io.dataset.DataSetMembers.ExternalResource(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

addIndices(indices)¶

-

indices¶

-

merge(other)¶

-

metaType¶

-

resourceId¶

-

-

class

pbcore.io.dataset.DataSetMembers.ExternalResources(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

__getitem__(index)¶

-

__iter__()¶

-

addResources(resourceIds)¶ Add a new external reference with the given uris. If you’re looking to add ExternalResource objects, append() or extend() them instead.

- Args:

- resourceIds: a list of uris as strings

-

resourceIds¶

-

resources¶

-

sort()¶ In theory we could sort the ExternalResource objects, but that would require opening them

-

-

class

pbcore.io.dataset.DataSetMembers.FileIndex(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

metaType¶

-

resourceId¶

-

-

class

pbcore.io.dataset.DataSetMembers.FileIndices(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

__getitem__(index)¶

-

__iter__()¶

-

-

class

pbcore.io.dataset.DataSetMembers.Filter(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

__getitem__(index)¶

-

__iter__()¶

-

addRequirement(name, operator, value)¶

-

merge(other)¶

-

plist¶

-

pop(index)¶

-

removeRequirement(req)¶

-

-

class

pbcore.io.dataset.DataSetMembers.Filters(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

__getitem__(index)¶

-

__iter__()¶

-

addRequirement(**kwargs)¶ Use this to add requirements. Members of the list will be considered options for fulfilling this requirement, all other filters will be duplicated for each option. Use multiple calls to add multiple requirements to the existing filters. Use removeRequirement first to not add conflicting filters.

- Args:

- name: The name of the requirement, e.g. ‘rq’ options: A list of (operator, value) tuples, e.g. (‘>’, ‘0.85’)

-

merge(other)¶

-

opMap(op)¶

-

registerCallback(func)¶

-

removeRequirement(req)¶

-

testCompatibility(other)¶

-

testParam(param, value, testType=<type 'str'>, oper='=')¶

-

tests(readType='bam', tIdMap=None)¶

-

-

class

pbcore.io.dataset.DataSetMembers.Parameter(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

name¶

-

operator¶

-

value¶

-

-

class

pbcore.io.dataset.DataSetMembers.Parameters(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

__getitem__(index)¶

-

__iter__()¶

-

merge(other)¶

-

-

class

pbcore.io.dataset.DataSetMembers.ParentTool(record=None)¶

-

class

pbcore.io.dataset.DataSetMembers.PrimaryMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper- Doctest:

>>> import os, tempfile >>> from pbcore.io import SubreadSet >>> import pbcore.data.datasets as data >>> ds1 = SubreadSet(data.getXml(5)) >>> ds1.metadata.collections[0].primary.resultsFolder 'Analysis_Results' >>> ds1.metadata.collections[0].primary.resultsFolder = ( ... 'BetterAnalysis_Results') >>> ds1.metadata.collections[0].primary.resultsFolder 'BetterAnalysis_Results' >>> outdir = tempfile.mkdtemp(suffix="dataset-doctest") >>> outXml = 'xml:' + os.path.join(outdir, 'tempfile.xml') >>> ds1.write(outXml, validate=False) >>> ds2 = SubreadSet(outXml) >>> ds2.metadata.collections[0].primary.resultsFolder 'BetterAnalysis_Results'

-

automationName¶

-

collectionPathUri¶

-

configFileName¶

-

copyFiles¶

-

resultsFolder¶

-

sequencingCondition¶

-

class

pbcore.io.dataset.DataSetMembers.Provenance(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapperThe metadata concerning this dataset’s provenance

-

createdBy¶

-

parentTool¶

-

-

class

pbcore.io.dataset.DataSetMembers.RecordWrapper(record=None)¶ Bases:

objectThe base functionality of a metadata element.

Many of the methods here are intended for use with children of RecordWrapper (e.g. append, extend). Methods in child classes often provide similar functionality for more raw inputs (e.g. resourceIds as strings)

-

__getitem__(tag)¶ Try to get the a specific child (only useful in simple cases where children will not be wrapped in a special wrapper object, returns the first instance of ‘tag’)

-

__iter__()¶ Get each child iteratively (only useful in simple cases where children will not be wrapped in a special wrapper object)

-

__repr__()¶ Return a pretty string represenation of this object:

“<type tag text attribs children>”

-

addMetadata(key, value)¶ Add a key, value pair to this metadata object (attributes)

-

append(newMember)¶ Append to the actual list of child elements

-

description¶

-

extend(newMembers)¶ Extend the actual list of child elements

-

findChildren(tag)¶

-

getMemberV(tag, container='text')¶ Generic accessor for the contents of the children of this element, without having to interface with them directly

-

getV(container='text', tag=None)¶ Generic accessor for the contents of this element’s ‘attrib’ or ‘text’ fields

-

index(tag)¶ Return the index in ‘children’ list of item with ‘tag’ member

-

merge(other)¶

-

metadata¶ Cleaner accessor for this node’s attributes. Returns mutable, doesn’t need setter

-

metaname¶ Cleaner accessor for this node’s tag

-

metavalue¶ Cleaner accessor for this node’s text

-

name¶

-

pop(index)¶

-

pruneChildrenTo(whitelist)¶

-

removeChildren(tag)¶

-

setMemberV(tag, value, container='text')¶ Generic accessor for the contents of the children of this element, without having to interface with them directly

-

setV(value, container='text', tag=None)¶ Generic accessor for the contents of this element’s ‘attrib’ or ‘text’ fields

-

submetadata¶ Cleaner accessor for wrapped versions of this node’s children.

-

subrecords¶ Cleaner accessor for this node’s children. Returns mutable, doesn’t need setter

Return the list of tags for children in this element

-

value¶

-

version¶

-

-

class

pbcore.io.dataset.DataSetMembers.ReferenceSetMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.DataSetMetadataThe DataSetMetadata subtype specific to ReferenceSets.

-

contigs¶

-

merge(other)¶

-

organism¶

-

ploidy¶

-

-

class

pbcore.io.dataset.DataSetMembers.RunDetailsMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

name¶

-

runId¶

-

-

class

pbcore.io.dataset.DataSetMembers.StatsMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapperThe metadata from the machine sts.xml

-

adapterDimerFraction¶

-

controlReadLenDist¶

-

controlReadQualDist¶

-

insertReadLenDist¶

-

insertReadQualDist¶

-

medianInsertDist¶

-

medianInsertDists¶

-

merge(other)¶

-

numSequencingZmws¶

-

prodDist¶

-

readLenDist¶

-

readLenDists¶

-

readQualDist¶

-

readQualDists¶

-

readTypeDist¶

-

shortInsertFraction¶

-

-

class

pbcore.io.dataset.DataSetMembers.SubreadSetMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.DataSetMetadataThe DataSetMetadata subtype specific to SubreadSets. Deals explicitly with the merging of Collections and BioSamples metadata hierarchies.

-

bioSamples¶ Return a list of wrappers around BioSamples elements of the Metadata Record

-

collections¶ Return a list of wrappers around Collections elements of the Metadata Record

-

merge(other)¶

-

-

class

pbcore.io.dataset.DataSetMembers.WellSampleMetadata(record=None)¶ Bases:

pbcore.io.dataset.DataSetMembers.RecordWrapper-

bioSamplePointers¶

-

comments¶

-

concentration¶

-

plateId¶

-

sampleReuseEnabled¶

-

sizeSelectionEnabled¶

-

stageHotstartEnabled¶

-

uniqueId¶

-

useCount¶

-

wellName¶

-

-

pbcore.io.dataset.DataSetMembers.filter_read(accessor, operator, value, read)¶